Dr Dries Smit

I am an AI researcher specialising in reinforcement learning, multi-agent systems, and large language models. My work focuses on applying these models in the medical and financial domains, designing multi-agent frameworks, improving foundational models, and leading various projects. I also led the team behind Laila, a fine-tuned version of Llama 3.1 (405B, 70B, and 8B) that assists biologists by interfacing with real lab equipment. My recent achievements include placing 1st in the ARC-AGI-3 Agent Preview Competition and, together with the team at Tufa Labs, 3rd in ARC-AGI-2 out of 1454 teams. Currently, I am conducting fundamental AI research in large-scale training and reinforcement learning.

Experience

Fundamental Researcher

Working on fundamental AI research in reinforcement learning and large-scale training.

Research Scientist

I helped design a high-performance multi-agent reinforcement learning framework, Mava. I also led the team behind Laila, a fine-tuned version of Llama 3.1 designed to assist biologists by interfacing with real lab equipment. Additionally, I led a team in developing an assistant that autonomously experiments with internal repositories to discover code improvements, optimising for downstream performance metrics.

Internship - Machine Learning Research

Conducted research on cutting-edge machine learning algorithms for biomedical applications, including optical character recognition, computer vision, and 3D body tracking, during three separate internship periods.

Machine Learning Engineer

Conducted research on various machine learning recommender systems, culminating in a presentation on their potential applications within the company.

Education

PhD in Electrical and Electronic Engineering

Doctorate focusing on reinforcement learning in multi-agent systems, completed under the supervision of Prof. Willie Brink, Prof. Herman A. Engelbrecht, and Dr Arnu Pretorius.

Master's in Electrical and Electronic Engineering

Graduated with a Cum Laude award. Research project focused on integrating deep neural networks with probabilistic models, supervised by Prof. Johan du Preez.

Bachelor's in Electrical and Electronic Engineering

Graduated with multiple Cum Laude awards for academic excellence.

Skills & Languages

Skills

- Programming Languages: Python, Java, MATLAB, C, C++

- Machine Learning Expertise: Reinforcement learning, computer vision, genetic algorithms, large language models

- Project Leadership: Leading research teams in high-impact, cross-disciplinary projects

Languages

- English (speak, read, write)

- Afrikaans (speak, read, write)

Awards

- Achieved 1st place globally in the ARC-AGI-3 Agent Preview Competition (2025)

- Achieved 3rd place globally in the ARC-AGI-2 Competition (2025)

- Cum Laude award for Master's in Electrical and Electronic Engineering

- Four Cum Laude awards for Bachelor's in Electrical and Electronic Engineering

- Golden Key Membership for academic achievements in the top 15% of undergraduate students

- Academic award for above 85% average in high school (matric)

Publications

- 2024 (ICML main paper): Should we be going MAD? Benchmarking Multi-Agent Debate between Language Models for Medical Q&A

- 2024 (ICML workshop): Generative Model for Small Molecules with Latent Space RL Fine-Tuning to Protein Targets

- 2023 (NeurIPS workshop): Offline RL for Generative Design of Protein Binders

- 2023 (NeurIPS workshop): Are we going MAD? Benchmarking Multi-Agent Debate between Language Models for Medical Q&A

- 2023 (ICLR workshop): Jumanji: A Diverse Suite of Scalable Reinforcement Learning Environments in JAX

- 2023 (JAAMAS main paper): Scaling Multi-Agent Reinforcement Learning to Full 11 Versus 11 Simulated Robotic Football

- 2022 (PhD): Scaling Multi-Agent Reinforcement Learning to Eleven Aside Simulated Robot Soccer

- 2021 (arXiv): Mava: A Research Framework for Distributed Multi-Agent Reinforcement Learning

- 2020 (Masters): Merging Deep Neural Networks and Probabilistic Models using Sum Product Networks

Projects

AutoGrader

For my final six months of undergraduate engineering studies, I developed a test grading system for the Applied Mathematics Department to replace the costly, custom multiple-choice templates. This system automatically detects handwritten student numbers, surnames, numeric responses, and multiple-choice answers, relying on a core data-driven machine learning pipeline. The most challenging aspect of this project was setting up a scalable and robust data pipeline. It needed to be resilient to human error, which can significantly impact model performance, while also being scalable enough to handle the entire university's workload. I received the Magna Cum Laude award for this work, and the system was adopted by the department and later by the entire university. I have since continued improving the system across multiple versions:

Version 1 (2016-2017): Focused on using a Radon transform to locate the answer sheet template. Probabilistic graphical models (PGMs) were used to interpret filled-in bubbles, and a CNN identified digits. Read the report and learn more about PGMs.

Version 2 (2018-2019): A website was developed, allowing lecturers to upload tests and automatically grade them. However, manual checks were still necessary for uncertain cases.

Version 3 (2020-2023): A complete overhaul introduced QR codes for accurate positioning and a CNN-Transformer architecture for interpreting handwritten answers. The system continuously learns from lecturer corrections, improving its accuracy over time. The AutoGrade website is available here.

Version 4 (2024): The AutoGrader system has now been adopted by the entire university, a significant milestone in its development. Ongoing work focuses on incorporating new capabilities using the latest open-source foundational vision models to further improve the system's accuracy and versatility.

Curious Agents

A project series exploring curiosity-driven reinforcement learning methods. The series focuses on building RL agents without explicit reward functions:

Curious Agents: An Introduction: In the first blog post, I provide background motivation for curiosity-based exploration in RL.

Curious Agents II: Solving MountainCar without Rewards: This post demonstrates training an agent to solve MountainCar without providing external rewards.

Curious Agents III: BYOL-Explore: Next in the series, I implement DeepMind's BYOL-Explore and demonstrate its effectiveness on JAX-based environments.

Curious Agents IV: BYOL-Hindsight: The latest post discusses BYOL-Hindsight, which addresses limitations in previous curiosity-based algorithms. I tested the algorithm in a custom 2D Minecraft-like environment.

Updates

-

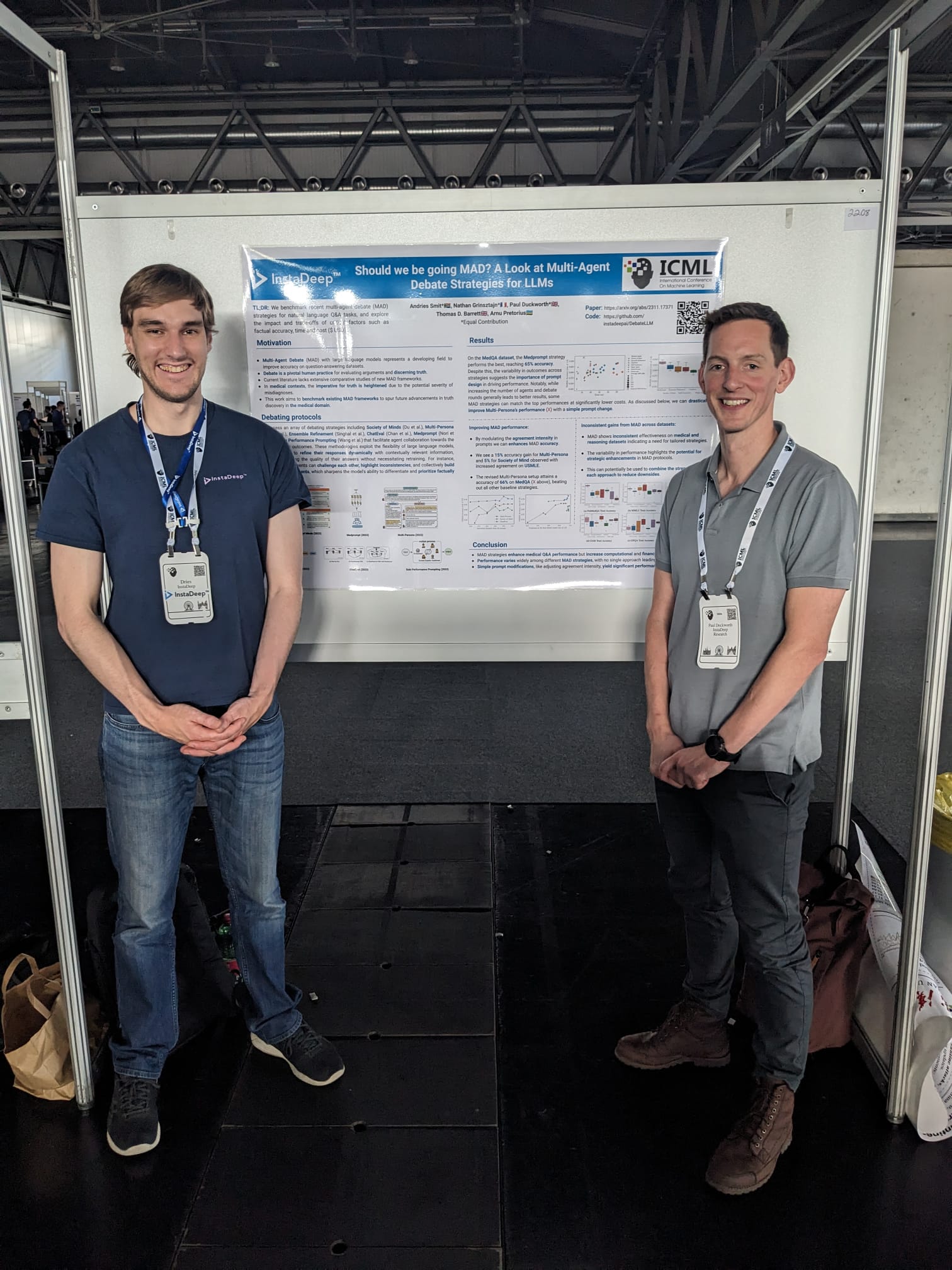

2024-07-21: Attended ICML 2024 in Vienna, Austria, presenting our work on multi-agent debate, offline RL for generative design, and offline multi-agent reinforcement learning (main authors could not attend).

-

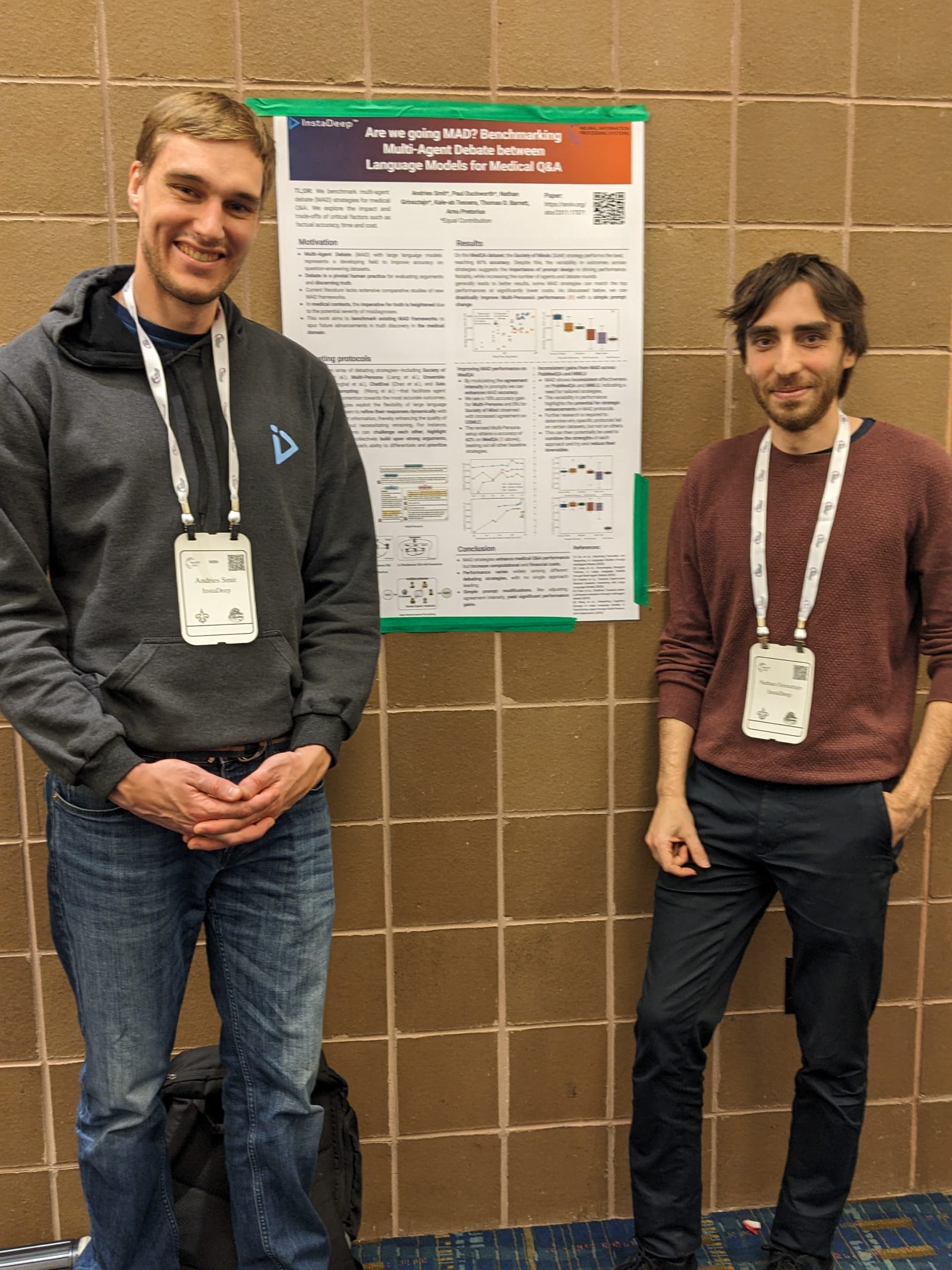

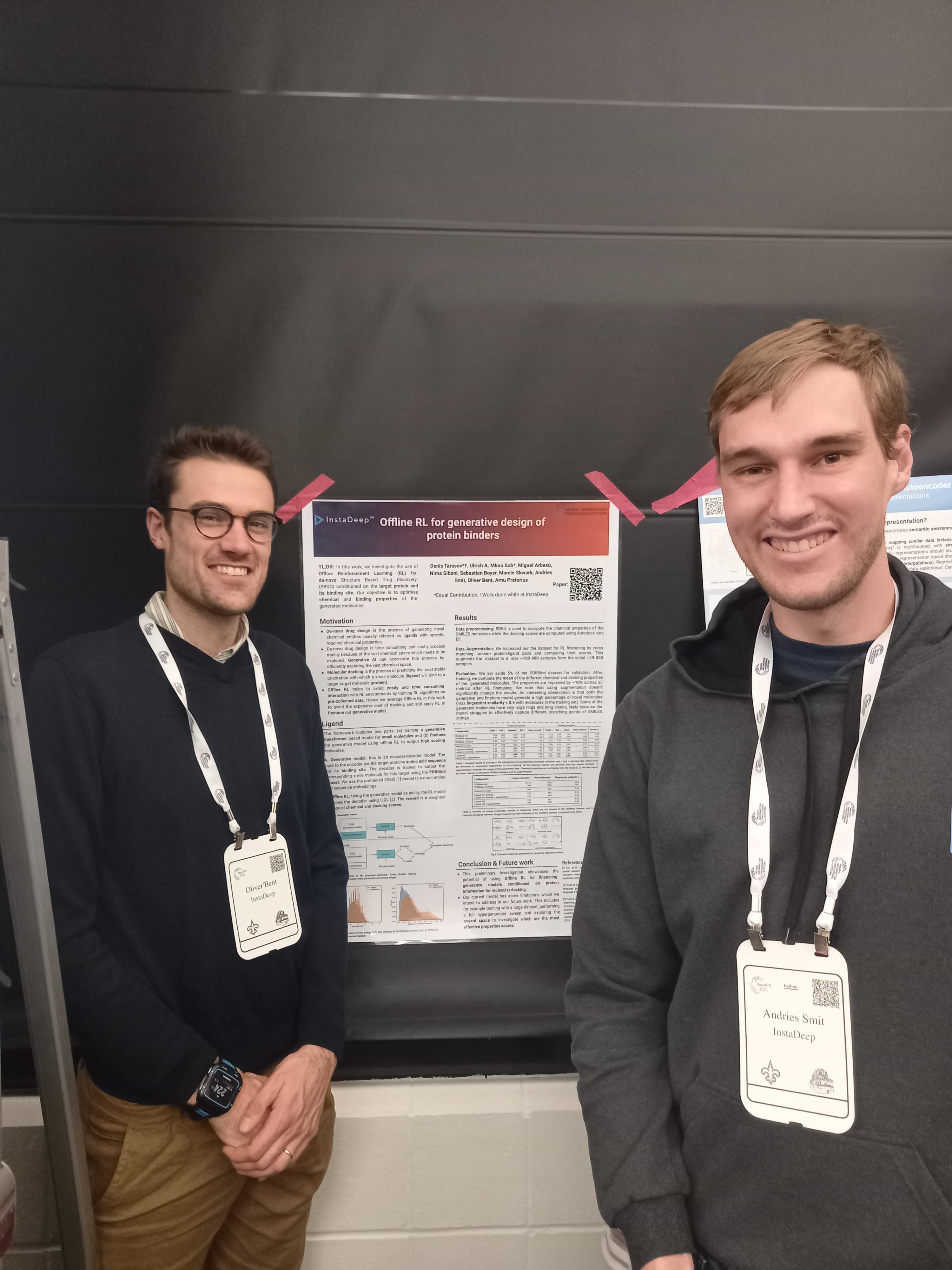

2023-12-10: Attended NeurIPS 2023 in New Orleans, Louisiana, USA, presenting our work on multi-agent debate and offline RL for generative design.

- 2022-12-12: Attended IndabaX in Pretoria, South Africa, presenting my PhD work on scaling simulated robotic soccer.

- 2022-10-19: Attended a company retreat in Istanbul, Turkey.

- 2022-10-19: Started a full-time position as a Research Scientist at InstaDeep after a two-year internship.

- 2020-03-08: Received my Master's degree (Cum Laude) in Electrical and Electronic Engineering from Stellenbosch University.

- 2018-12-06: Received my Bachelor's degree (Cum Laude) in Electrical and Electronic Engineering from Stellenbosch University.